Compositing video frames

I gave a workshop on my Light Painting technique at Oxford Hackspace in February 2015. In preparation for the evening, I captured a high-speed movie of my light strip painting an image. To help illustrate what’s happening, I thought it would be nice to be able to see the image being built up in ‘real time’.

After a bit of thought, I’ve come up with a quick and dirty way of building up an image from a series of video frames. Beware: this only works well when the background of the image is dark.

Here’s the video before compositing.

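First, use ffmpeg (you can get it via MacPorts) to split the movie into numbered JPEG frames (out0001.jpg, out0002.jpg, and so on). Neither ffmpeg nor Octave’s imwrite will create missing folders, so make them first:

```sh
mkdir -p ~/folder/stacked
ffmpeg -i infile.mov ~/folder/out%04d.jpg
```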

Algorithm

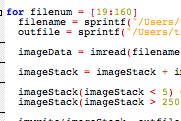

The code is written in Octave, although the algorithm should carry over to other languages if you prefer. It simply loops through the frames and adds them together. The subtle part is that it throws away any pixel value of 70 counts or less (each colour channel is tested separately), since those regions were dark in my images and would only add noise. You also need to make sure that no value goes above 255 counts, the maximum for 24-bit images (8 bits per channel), otherwise the frames will look weird when they’re encoded back into video. The code clamps at 250 to stay safely below that.
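To make the three steps concrete (drop dark values, accumulate, clamp), here is a minimal sketch of the same idea in plain Python rather than Octave. It isn’t the code I used: it assumes each frame is a small list of rows of (R, G, B) tuples with values from 0 to 255, and the name composite_frames is purely illustrative. It yields the running composite after every frame, which is what produces the ‘real time’ build-up.

```python
from typing import Iterable, Iterator, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255 (assumed layout)
Frame = List[List[Pixel]]      # rows of pixels


def composite_frames(frames: Iterable[Frame], threshold: int = 70,
                     ceiling: int = 250) -> Iterator[Frame]:
    """Yield the running composite after each frame has been added."""
    stack: List[List[List[int]]] = []
    for frame in frames:
        if not stack:
            # Start from black, sized to match the first frame
            stack = [[[0, 0, 0] for _ in row] for row in frame]
        for y, row in enumerate(frame):
            for x, pixel in enumerate(row):
                for c, value in enumerate(pixel):
                    if value > threshold:  # ignore dark values: they only add noise
                        stack[y][x][c] = min(stack[y][x][c] + value, ceiling)
        # Copy into tuples so later frames don't alter what was already yielded
        yield [[(px[0], px[1], px[2]) for px in row] for row in stack]
```

Feeding it the one-row frames [[(50, 100, 200), (10, 10, 10)]] and [[(80, 60, 100), (200, 90, 71)]] shows both rules at work: the first composite is [[(0, 100, 200), (0, 0, 0)]] because 50 and the 10s fall below the threshold, and after the second frame the first pixel’s blue channel would reach 300, so it is held at 250.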

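```octave
% Running sum of the frames, kept as double so the additions don't saturate early
imageStack = zeros(720, 1280, 3);   % must match the frame size (height, width, channels)

for filenum = 19:160   % frame range used for my clip; adjust to suit yours
  filename = sprintf("~/folder/out%04d.jpg", filenum);
  outfile = sprintf("~/folder/stacked/%04d.jpg", filenum);
  printf("%s\n", filename);   % show progress

  imageData = double(imread(filename));

  % Ignore very dark pixels since they'll just add noise
  imageStack = imageStack + imageData .* (imageData > 70);

  % Make sure it's not clipping: keep every value inside the 8-bit range
  imageStack(imageStack > 250) = 250;

  % Write the running composite as an 8-bit image
  imwrite(uint8(imageStack), outfile);
endfor
```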
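The clamp is there because an 8-bit channel can’t hold more than 255. Octave’s integer types saturate at 255, but a naive conversion that simply keeps the low 8 bits wraps around instead, so an over-bright pixel comes out dark. The hypothetical helpers store_wrapping and store_clamped (Python again, purely illustrative) compare the two for one channel summed to 350:

```python
def store_wrapping(value: int) -> int:
    """Hypothetical naive 8-bit store: keeps only the low 8 bits, so values wrap."""
    return value & 0xFF


def store_clamped(value: int, ceiling: int = 250) -> int:
    """Clamp into 0..ceiling first (the Octave loop uses 250), then store."""
    return min(max(value, 0), ceiling)


summed = 200 + 150              # one channel of a pixel lit in two frames
print(store_wrapping(summed))   # 94
print(store_clamped(summed))    # 250
```

With wrapping, the brightest part of the trail drops to a dim 94; clamping keeps it at 250.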

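Once you’ve got the images and are happy with them, convert them back into a movie with ffmpeg. Because the stacked frames start at 0019, tell ffmpeg where the sequence begins with -start_number; by default it only looks for a first image numbered 0 to 4.

```sh
ffmpeg -f image2 -framerate 20 -start_number 19 -i ~/folder/stacked/%04d.jpg -s 1280x720 -vcodec libx264 -pix_fmt yuv420p outmov.mp4
```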
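ffmpeg’s image2 reader needs to find the first frame near its start number and stops at the first gap in the numbering, so it can help to check which gap-free run of stacked frames you actually have before encoding. A minimal sketch, assuming the NNNN.jpg names written by the Octave loop; contiguous_run is a hypothetical helper, not part of ffmpeg:

```python
import re
from typing import Iterable, Optional, Tuple

# Names written by the stacking loop, e.g. "0019.jpg"
FRAME_NAME = re.compile(r"^(\d{4})\.jpg$")


def contiguous_run(filenames: Iterable[str]) -> Optional[Tuple[int, int]]:
    """Return (first, last) frame numbers of the gap-free run starting at the
    lowest-numbered frame, or None if no names match."""
    present = set()
    for name in filenames:
        match = FRAME_NAME.match(name)
        if match:
            present.add(int(match.group(1)))
    if not present:
        return None
    first = min(present)
    last = first
    while last + 1 in present:  # walk forward until the first missing number
        last += 1
    return first, last
```

For example, contiguous_run(os.listdir(os.path.expanduser("~/folder/stacked"))) should give (19, 160) if every frame was written. The first number is the one to pass as the start number, and a smaller second number points at a missing frame.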

And that’s it: simple.

Here’s the final result: